The QA Chamber of Horrors: Cautionary Tales for Software Leaders

Learn from real QA disasters that cost millions. Discover critical testing lessons from database failures, flaky automation, and deadly UI bugs.

It's the spooky season, and while many are worried about ghosts and goblins, the true nightmare for any software professional is a critical bug slipping into production.

Testers in the trenches, on Reddit, in the Ministry of Testing forums, and in QA Slack channels everywhere know the chilling truth: some software horrors are not mythical. They are real, and the cost can be measured in millions, lost jobs, or worse.

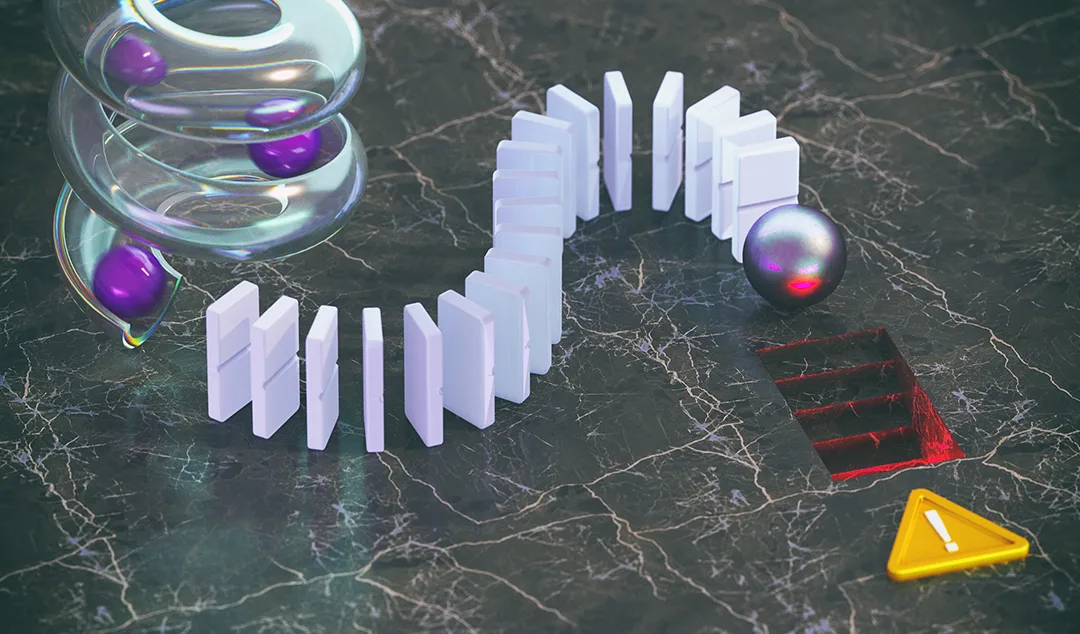

For a bit of seasonal fun and a serious lesson, we’ve opened the QA Chamber of Horrors to curate four true tales of testing woe. Read these stories shared by the community or recorded in infamy and take a lesson back to your team. Because the scariest thing about these monsters is that they were once just small, overlooked defects.

Horror Story 1: The Ghostly Database Graveyard

The Fear: Uncontrolled Data Growth & The Silent Killer

A tester, new to a legacy system, noticed the database size was... enormous. When a "page" or "post" was deleted from the CMS, the page itself was removed but, unbeknownst to the tester, all of its underlying components (images, paragraphs, and nested items) were left behind.

- The Bug: The deletion routine only targeted the top-level parent record, creating millions of orphaned, "dead" entries in the database.

- The Horror: The database backup and restore times grew monstrously long. No one could pinpoint why, and every new deployment brought a lingering dread that restoring from a backup might crash the system. The team was sitting on a ticking time bomb of a "few million dead entries," which required a terrifying one-off cleanup script run against the live production environment.

- The Lesson: Test the cleanup! Deletion tests must verify that all related data is removed or correctly archived, not just that the parent record is gone. Data integrity and database performance are silent features that can turn into production-crushing disasters.
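To make that lesson concrete, here is a minimal sketch of an orphan check using Python's built-in sqlite3 module. The `pages` and `components` tables and every function name are hypothetical stand-ins, not the schema of the CMS in the story; the point is only that a deletion test should count the children, not just confirm the parent is gone.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a tiny in-memory CMS: one page with two child components.

    The schema is an illustrative assumption, not the real system's.
    """
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE pages (id INTEGER PRIMARY KEY, title TEXT);
        CREATE TABLE components (
            id INTEGER PRIMARY KEY,
            page_id INTEGER NOT NULL,
            kind TEXT
        );
    """)
    conn.execute("INSERT INTO pages (id, title) VALUES (1, 'Home')")
    conn.executemany(
        "INSERT INTO components (page_id, kind) VALUES (?, ?)",
        [(1, "image"), (1, "paragraph")],
    )
    return conn


def delete_page_parent_only(conn: sqlite3.Connection, page_id: int) -> None:
    """The buggy routine from the story: removes only the top-level record."""
    conn.execute("DELETE FROM pages WHERE id = ?", (page_id,))


def delete_page_with_children(conn: sqlite3.Connection, page_id: int) -> None:
    """One possible fix: remove children and parent in a single transaction."""
    with conn:
        conn.execute("DELETE FROM components WHERE page_id = ?", (page_id,))
        conn.execute("DELETE FROM pages WHERE id = ?", (page_id,))


def count_orphans(conn: sqlite3.Connection) -> int:
    """Count components whose parent page no longer exists."""
    row = conn.execute("""
        SELECT COUNT(*)
        FROM components AS c
        LEFT JOIN pages AS p ON p.id = c.page_id
        WHERE p.id IS NULL
    """).fetchone()
    return row[0]
```

A deletion test built on this would call the delete routine and then assert that `count_orphans` returns zero. In a real schema, a foreign key with a cascading delete rule may be a better fix than a hand-written cleanup; the orphan query is still worth keeping as a regression check.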

Horror Story 2: The Flaky CI/CD Specter

The Fear: The Intermittent, Untraceable Failure

The Automation Engineer was constantly haunted by a specter: the flaky test. Locally, a test would run 100 times without issue. But once it entered the continuous integration (CI) pipeline, running on a fleet of Jenkins-managed emulators, no less, it would fail randomly and intermittently.

- The Bug: The failure wasn't in the code under test or even the test code. It was in the fragile CI environment, the network, or the virtual machine itself ("Jenkins voodoo"). Errors included "Element not found" or "Timed out," masking deeper, environmental problems.

- The Horror: The team became trained to ignore failures. A shared spreadsheet of "known flaky tests" was created, which grew and shrank constantly. Developers would bypass automation engineers, asking "Is this one of the flaky ones?" The true disaster was the erosion of trust in the test suite itself. When a real, critical regression was caught, it was often dismissed as "just another flake."

- The Lesson: A flaky test is worse than no test. Establish a zero-tolerance policy for flakiness. Flaky tests reduce confidence, increase maintenance burden, and train your team to ignore your most valuable warning system.
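One way to enforce a zero-tolerance policy is to stop guessing and measure. The sketch below reruns a test callable a fixed number of times and labels it as stable, consistently failing, or flaky. The function name, the labels, and the default of 20 runs are all illustrative assumptions, not a feature of Jenkins or of any particular test framework.

```python
from typing import Callable


def classify(test: Callable[[], None], runs: int = 20) -> str:
    """Run `test` repeatedly and label its behavior.

    A test "fails" here if it raises any exception, including
    AssertionError. The labels are hypothetical, chosen for this sketch.
    """
    failures = 0
    for _ in range(runs):
        try:
            test()
        except Exception:
            failures += 1

    if failures == 0:
        return "stable-pass"
    if failures == runs:
        return "consistent-fail"
    return "flaky"


def make_every_third_call_fails() -> Callable[[], None]:
    """Build a deterministic stand-in for an intermittent failure."""
    calls = {"n": 0}

    def test() -> None:
        calls["n"] += 1
        if calls["n"] % 3 == 0:
            raise TimeoutError("Timed out")

    return test
```

Running this classifier inside the CI environment rather than on a laptop matters, since the story's failures only appeared in the pipeline. A test labeled "flaky" gets quarantined and fixed; a test labeled "consistent-fail" is a real regression and should never be waved away as a flake.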

Horror Story 3: The Untested Deployment of Doom

The Fear: The Manager Who Blames the Gatekeeper

A QA manager was under pressure to ship a new feature. They instructed a QA engineer to test workflows across "all customer staging environments," but with no documentation, no test plan, and a ridiculous deadline.

- The Bug: Almost every staging environment was misconfigured or broken. The QA engineer struggled to distinguish between a new product bug and an infrastructure issue. Despite the reported bugs, the release lead decided to deploy straight to production.

- The Horror: The production environment was in a "bad state," and a critical, last-minute regression slipped through. The manager, ignoring the faulty staging environments and lack of process, yelled at the QA engineer for "not stopping the deployment" and "not catching something obvious."

- The Lesson: Quality is not a department; it's a team commitment. QA is not the sole "gatekeeper." When you ignore broken environments, rush the process, and provide zero guidance, the entire team shares the blame for the eventual customer disaster. Support your testers with the environments, documentation, and authority they need to succeed.
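Part of that support can be automated. The QA engineer in this story could not tell product bugs from infrastructure problems, so a preflight check that runs before any workflow test can at least flag environments that are broken on arrival. The sketch below is a minimal version under stated assumptions: the check names and the `PreflightResult` type are hypothetical, and real checks would probe things like service health or configuration rather than return constants.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class PreflightResult:
    """Outcome of the preflight checks for one environment."""
    environment: str
    failed_checks: List[str]

    @property
    def healthy(self) -> bool:
        return not self.failed_checks


def preflight(
    environment: str,
    checks: Dict[str, Callable[[], bool]],
) -> PreflightResult:
    """Run each named check; a False result or an exception counts as a failure."""
    failed: List[str] = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            ok = False
        if not ok:
            failed.append(name)
    return PreflightResult(environment, failed)


def unreachable_database() -> bool:
    """Illustrative check that simulates a broken dependency."""
    raise ConnectionError("database unreachable")
```

A failure reported against an environment whose preflight was unhealthy is an infrastructure ticket first and a product bug second. Recording that distinction also gives the release lead evidence, rather than a tester's hunch, when deciding whether a deployment should go ahead.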

Conclusion: Don't Let Your Team Be Haunted

These horror stories reveal fundamental testing gaps that modern QA teams must address:

Data Integrity Validation

Implement comprehensive database testing that verifies complete data lifecycle management, including cleanup processes and performance impact monitoring.

Test Execution Reliability

Establish robust CI/CD infrastructure with consistent test environments, and choose an automation solution that has machine-learning-based self-healing. Unreliable automation is counterproductive and undermines team confidence.

Collaborative Quality Culture

Shift from individual blame to team accountability. QA professionals require proper environments, documentation, and organizational support to succeed.

User Experience Under Stress

Conduct usability testing that simulates high-pressure scenarios and system latency to ensure interfaces communicate accurate information when it matters most.

Transform Your Testing Approach

These real-world disasters highlight the critical importance of comprehensive testing strategies that go beyond functional verification. Modern testing teams need scalable, AI-powered automation platforms that can handle complex scenarios while maintaining reliability and accuracy.

Consider how advanced testing platforms can help prevent these horror stories:

- Automated data integrity validation that detects orphaned records and performance degradation

- Self-healing test automation that adapts to environmental changes and reduces flakiness

- Comprehensive cross-platform testing that ensures consistent quality across all deployment targets

- AI-driven test creation that identifies edge cases and stress scenarios human testers might miss

Don't let these testing nightmares become your organization's next disaster story. Invest in robust testing infrastructure, establish clear quality processes, and empower your QA teams with the tools and authority they need to protect your users and your business.