How NLP Will Drive the Future of Software Testing

NLP software testing makes it easier for teams to compile, edit, manage, and automate all of their test cases and tightly integrate them with dynamic delivery pipelines.

As Functionize debuts its NLP feature set, it’s important that our readers understand how natural language processing has been changing our world, and how you will soon be able to leverage NLP to further improve automated testing of your software applications.

In this article, we look at the challenges of conventional requirements-based testing, recent industry advancements in NLP, and what NLP innovations you can expect from Functionize in the near future.

Test case design and production is largely a manual activity that consumes as much as 70% of a software testing life cycle. Test cases written by inexperienced testers often don’t provide adequate coverage of functional requirements. In addition, when requirements change, manually written test cases often can’t be reused, and the resulting rewrites translate into schedule slippage and cost increases. Many software projects follow some form of behavior-driven development (BDD) for requirements definition, in which requirements progress from business stakeholders into user stories written in natural language.

User requirements must eventually be rendered in natural language so that humans can easily understand and implement them. However, natural language test cases have limitations: an incorrect interpretation of a test case can introduce verification errors.

To minimize such errors, requirements might be converted from natural language constructs into a computational model that uses the Unified Modeling Language (UML), which has long been a standard in object-oriented software design. Such model-based testing facilitates upstream test case generation, so that developers can participate and more readily identify inconsistencies, which in turn improves the design. The challenge is that UML diagrams can be elaborate and tedious, and they require special skills to craft and interpret.

Good requirements are essential for reliable testing

Testing ensures that an application performs according to its design and to common user expectations. Largely, this is done by (a) verifying correct behavior along all pathways in the application, and (b) identifying all relevant data that will validate all possible usage contexts. The test suite must aim to provide full coverage of all paths and all types of data. This means that user and system requirements must have sufficient detail to enable test designers to craft high-quality test cases. As technological complexity continues to increase, there is an acute need for an automated test case generation solution that can keep costs under control while providing adequate coverage of elaborate software applications and systems. More on that later in the article. Let’s take a closer look at the link between requirements and testing.

Requirements are the foundation for all software development efforts since they define the new functionality that the stakeholders expect and how the application will behave for the users. Most of the problems that arise in building and refining a software product are directly traceable to errors in requirements. Poor requirements definition—often the result of too many changes—is a major cause of software project failures.

Natural language requirements

Using natural language to document the requirements of complex software products entails these critical problems:

- Ambiguity

- Imprecision

- Inaccuracy

- Inconsistency

- Incomplete definitions
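To make the first two problems concrete, here is a minimal sketch of the kind of check an NLP tool might run over requirement sentences: it flags vague adjectives, weak modal verbs, and pronouns whose referents may be unclear. The word lists and the function name `find_requirement_smells` are illustrative assumptions, not a standard vocabulary or any product’s API, and a simple word lookup like this catches only surface-level ambiguity.

```python
import re

# Illustrative word lists (assumptions, not a standard vocabulary).
VAGUE_TERMS = {"fast", "quickly", "user-friendly", "easy", "appropriate", "adequate", "etc"}
WEAK_MODALS = {"should", "may", "might", "could"}
PRONOUNS = {"it", "this", "they", "them"}


def find_requirement_smells(requirement: str) -> dict[str, list[str]]:
    """Return the suspicious words found in one requirement sentence, by category."""
    # Lowercase words, keeping hyphenated compounds such as "user-friendly" intact.
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", requirement.lower())
    return {
        "vague": [w for w in words if w in VAGUE_TERMS],
        "weak_modal": [w for w in words if w in WEAK_MODALS],
        "ambiguous_reference": [w for w in words if w in PRONOUNS],
    }


if __name__ == "__main__":
    req = "The page should load quickly and it must be user-friendly."
    for category, hits in find_requirement_smells(req).items():
        if hits:
            print(f"{category}: {', '.join(hits)}")
```

On the sample requirement, the sketch flags “quickly” and “user-friendly” as vague, “should” as a weak modal, and “it” as a possibly ambiguous reference, each of which a reviewer would want to pin down before writing test cases.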

Critics contend that natural language requirements are vestiges of archaic practices that persist because of poor education or inadequate analysis methodology. The remedy, say these critics, is to manually translate NL requirements into formal logical schemes. This, they assure us, will improve analysis, implementation, and testing. However, this is a daunting and error-prone prospect for developers and testers who have no training in such formal and tedious methods.

While requirements formalization is arguably a prerequisite for any valuable analysis activity, manual formalization is impractical for any non-trivial software development effort. Critics also argue against automating requirements formalization with natural language processing (NLP), claiming that the techniques are too immature and inadequate for such tasks. Such dissenters are likely unaware of the huge advances of recent years. NLP and text-mining technologies have made giant leaps forward, as is evident from their use in diverse government and commercial applications, ranging from web search to aircraft control to voice activation. In fact, Functionize is now exploiting these NLP advances to further improve its test automation platform.

What is NLP?

Natural language processing is an artificial intelligence specialty by which machines can identify, interpret, and generate human language. NLP arises from the intersection of many disciplines such as computational linguistics and computer science. The aim of NLP is to build machines that can approximate human language comprehension.

The evolution of NLP

Though NLP isn’t new, the technology has been advancing rapidly because of growing interest in communication between humans and machines. Interactive, high-volume NLP has become quite feasible with the availability of very powerful computing resources, high-performance algorithms, and readily accessible big data.

Humans read, write, and speak at least one language, such as English, Chinese, Korean, Spanish, or French. Machine code, the native language of computers, can’t be understood by most people. At that level, machines operate on sequences of millions of bits that encode the instructions behind software behavior.

In the 1950s, programmers used punch cards to direct computers to perform specific tasks, a tedious process known to only a few specialists. In stark contrast, in 2018 you can ask your phone to listen to a song playing in a cafe and reply with the title and the artist. Your phone may then prompt you to speak the category in which you want to place this song in your music library. You can tell the device to remind you in the future to play this song when you open your music app.

Look more closely at this interaction. You don’t need to tap your phone at all. Your device activates when it hears your prompt; it “understands” the subtlety in your words, executes several specific actions, records future directives, and gives feedback in a proper sentence, all within a few seconds. This exchange is possible because of NLP working together with machine learning and possibly deep learning algorithms. Functionize NLP brings similar technology to bear on the challenges of software test automation.

How does NLP work?

Human language is immensely diverse and complex. There are thousands of languages, each of which contains a vast array of letters, words, distinctive grammar, idioms, syntax rules, slang, and alternative words and phrases. Written language often contains misconstructions, misspellings, abbreviations, and grammatical errors. Speech exhibits regional accents, mumbling, stuttering, and sometimes a mix of languages.

Supervised and unsupervised deep learning are now used extensively for modeling human language, but there is a critical, complementary need for syntactic and semantic comprehension that goes beyond the machine learning domain. Specialized, focused NLP is key to resolving language ambiguities, and it adds numeric structure to the data to support a variety of downstream applications, including text analytics and speech recognition.

Elemental language decomposition

Basic NLP tasks include parsing, tokenization, lemmatization, stemming, part-of-speech tagging, language detection, and identification of semantic relationships.

These basic tasks often combine to support more elaborate NLP/NLU features and processes:

- Discovery and modeling — Precisely capture themes and meanings in collections of text, then optimize and forecast.

- Extraction — Automatically discern and pull information from textual sources.

- Categorization — Linguistic document summarization and indexing; identify duplicates.

- Sentiment analytics — Identify moods or opinions within text, optionally perform average sentiment analysis and opinion assessment.

- Speech-to-text / text-to-speech — Convert speech into text, and text into speech.

- Human language translation — Automatic translation of text or speech from one language to another.
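Several of the basic tasks named earlier, namely tokenization, stemming, and lemmatization, can be sketched in plain Python. The toy functions below (hypothetical names `tokenize`, `stem`, and `lemmatize`) assume a simple regular-expression tokenizer, a crude suffix-stripping stemmer rather than a real algorithm such as Porter’s, and a tiny hard-coded lemma table standing in for a real dictionary. They show why the two normalization steps differ: stemming chops suffixes mechanically, while lemmatization maps a word to its dictionary form.

```python
import re

# A tiny lookup table standing in for a real lemmatizer's dictionary (assumption).
LEMMAS = {"ran": "run", "running": "run", "better": "good", "was": "be", "were": "be"}

# Suffixes stripped by the toy stemmer, tried in order (not the Porter algorithm).
SUFFIXES = ("ing", "ed", "es", "s")


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def stem(token: str) -> str:
    """Crudely strip one common suffix, keeping a stem of at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def lemmatize(token: str) -> str:
    """Map a token to its dictionary form if the toy table knows it."""
    return LEMMAS.get(token, token)


if __name__ == "__main__":
    tokens = tokenize("The testers were running checks.")
    print(tokens)                          # ['the', 'testers', 'were', 'running', 'checks', '.']
    print([stem(t) for t in tokens])       # ['the', 'tester', 'were', 'runn', 'check', '.']
    print([lemmatize(t) for t in tokens])  # ['the', 'testers', 'be', 'run', 'checks', '.']
```

The stemmer reduces “running” to the non-word “runn”, while the lemma table maps it to “run” and “were” to “be”. Production libraries such as NLTK or spaCy handle these tasks with far larger rule sets and trained models.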

NLP applications

Though you may not be aware, there are now many common applications of NLP. This goes beyond virtual assistants such as Siri or Alexa to examples such as these:

- Seen the transcripts of voicemails in your email inbox? That’s the result of NLP speech-to-text conversion.

- Have a look at the messages in your spam folder, and look for patterns among the subject lines. Beyond the types of emails that you explicitly filter, the others in this folder are the result of Bayesian spam filtering, which depends on statistical NLP techniques.

- When you navigate a website using its search bar, you’re enjoying the benefits of NLP search, modeling, extraction and categorization methods.
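The Bayesian spam filtering mentioned above can be illustrated with a minimal multinomial naive Bayes classifier. This is a sketch under simplifying assumptions: a regex tokenizer, add-one (Laplace) smoothing, two hard-coded labels, and a four-message training set invented purely for illustration. Real spam filters use far more data and features. The classifier compares the log-probability of a message under each label and picks the larger one.

```python
import math
import re
from collections import Counter

LABELS = ("spam", "ham")


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesSpamFilter:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in LABELS}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        """Record one labeled training message."""
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokenize(text))

    def _log_score(self, words: list[str], label: str, vocab_size: int) -> float:
        """Log prior plus the sum of smoothed log likelihoods of each word."""
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)
        total_words = sum(self.word_counts[label].values())
        for word in words:
            count = self.word_counts[label][word]
            score += math.log((count + 1) / (total_words + vocab_size))
        return score

    def classify(self, text: str) -> str:
        """Return the label with the highest unnormalized log posterior."""
        vocab = set().union(*(self.word_counts[label] for label in LABELS))
        words = tokenize(text)
        scores = {label: self._log_score(words, label, len(vocab)) for label in LABELS}
        return max(scores, key=scores.get)


if __name__ == "__main__":
    spam_filter = NaiveBayesSpamFilter()
    # Invented training messages for illustration only.
    spam_filter.train("win a free prize now", "spam")
    spam_filter.train("claim your free money", "spam")
    spam_filter.train("meeting notes for the release", "ham")
    spam_filter.train("please review the test plan", "ham")
    print(spam_filter.classify("free prize money"))          # spam
    print(spam_filter.classify("review the release notes"))  # ham
```

Working in log space avoids numeric underflow when many small word probabilities are multiplied together. The sketch assumes each label has at least one training message; otherwise its log prior would be undefined.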

NLP has grown to encompass what is known as natural language understanding (NLU), which goes beyond language structure to precisely discern intent, context, and ambiguity, and optionally to generate human language. NLU algorithms must manage the highly complex task of semantic interpretation, seeking to identify the intended meaning of language. This includes handling all of the inferences, suggestions, idioms, and subtleties that humans employ in written text and speech.

Functionize brings the power of NLP to test automation

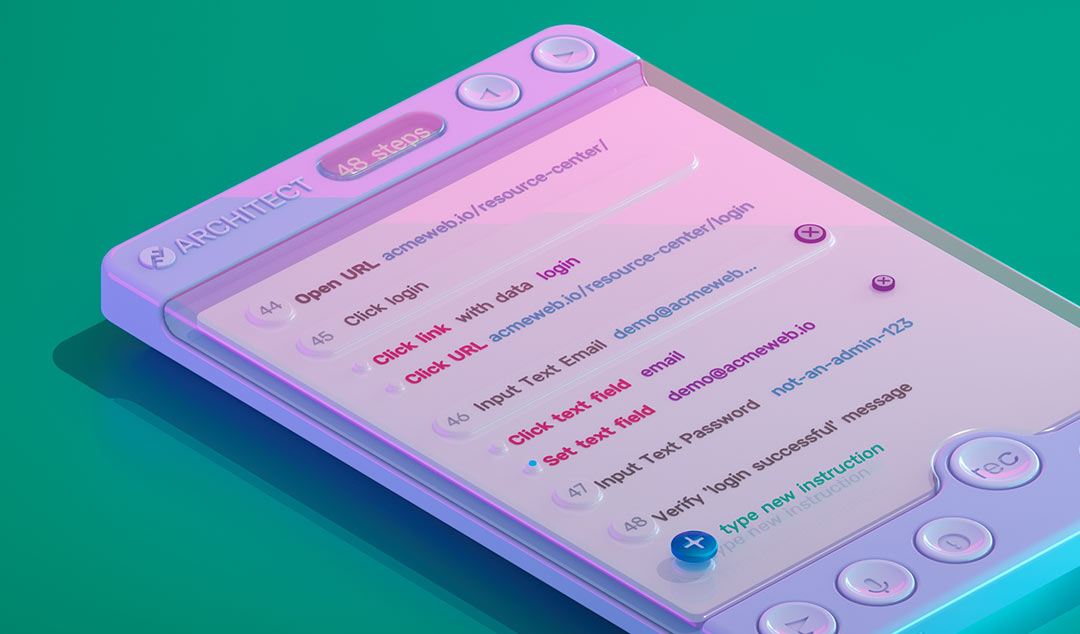

As we wrote recently, NLP technology is now at the heart of the Functionize testing automation platform. It’s never been easier to create, edit, manage, and automate all of your test cases and tightly integrate them with your dynamic delivery pipeline.

The Functionize NLP engine ingests human-readable, preformatted test cases written as simply and naturally as you might already have them in Microsoft Word or Excel documents. It’s as simple as writing “Verify that all currency amounts display with a currency symbol.” Anyone on the development team can write statements like these. Indeed, most companies already have a written collection of statements that articulate their use cases and user journeys. Many such statements can be combined and fed into the Functionize NLP engine, which rapidly and intelligently processes the entire set of test cases and generates the steps for each test case. These tests are easy to maintain as your software product continues to evolve.
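This article does not describe the internals of the Functionize engine, and modern systems rely on trained language models rather than hand-written rules. The following is therefore only a toy illustration of the general idea: turning a plain-English test statement into a structured step that a test runner could execute. The `ParsedStep` format, the action names, and the three sentence patterns are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class ParsedStep:
    """A hypothetical structured test step produced from one English sentence."""
    action: str
    target: str
    value: Optional[str] = None


# Hypothetical sentence patterns, tried in order; each maps to one action name.
PATTERNS = [
    (re.compile(r"^click (?:on )?(?:the )?(?P<target>.+?)\.?$", re.I), "click"),
    (re.compile(r'^(?:enter|type) "(?P<value>[^"]+)" (?:into|in) (?:the )?(?P<target>.+?)\.?$', re.I), "type"),
    (re.compile(r"^verify that (?:all )?(?P<target>.+?) displays? with (?:a |an )?(?P<value>.+?)\.?$", re.I),
     "assert_displays"),
]


def parse_step(sentence: str) -> ParsedStep:
    """Convert one natural-language test statement into a ParsedStep."""
    text = sentence.strip()
    for pattern, action in PATTERNS:
        match = pattern.match(text)
        if match:
            groups = match.groupdict()
            return ParsedStep(action=action, target=groups["target"], value=groups.get("value"))
    raise ValueError(f"Unrecognized test statement: {sentence!r}")


if __name__ == "__main__":
    for line in [
        "Verify that all currency amounts display with a currency symbol.",
        "Click the Checkout button.",
        'Enter "alice@example.com" into the email field.',
    ]:
        print(parse_step(line))
```

A rule-based parser like this fails as soon as someone phrases a step differently, which is exactly the gap that statistical NLP and NLU models aim to close.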

Functionize combines all phases of testing into a smooth, seamless testing experience. When NLP is combined with the AI and machine learning that are integral to the Functionize platform, the result is accurate, meaningful, and flexible testing that enables greater delivery momentum. The Functionize autonomous test platform captures and reports all aspects of the user experience. This enables companies to deliver a superior experience, increase customer satisfaction, and drive the business forward.

Learn more about the benefits of NLP in test creation and maintenance.